Most people encounter AI automation without realizing it. A job application is filtered before a human ever reads it. A customer service issue is resolved without speaking to a person. A delivery route is chosen by an algorithm long before a driver turns the key. These moments feel ordinary, almost invisible, yet together they signal a major shift in how decisions and labor are being handed over to machines.

AI automation is not arriving with dramatic announcements or clear boundaries. It is slipping quietly into everyday systems, taking over tasks once managed by people and reshaping expectations about speed, efficiency, and reliability. What makes this shift different from earlier forms of automation is not just that machines are working faster, but that they are increasingly deciding, adapting, and optimizing on their own.

At its core, AI automation refers to systems that can perform tasks with minimal human involvement while learning from data. Unlike traditional automated machines that follow fixed instructions, AI-driven systems adjust their behavior based on patterns they observe. This allows them to handle complex activities such as sorting job applications, managing supply chains, moderating online content, or predicting equipment failures. For businesses and institutions, this offers efficiency on a scale that was previously impractical.

One of the clearest benefits of AI automation is productivity. Automated systems can operate continuously without fatigue, process vast amounts of information in seconds, and reduce routine errors. In workplaces, AI can take over repetitive administrative tasks such as scheduling, data entry, and reporting, freeing employees to focus on problem-solving and creative work. In manufacturing, AI-powered robots can improve precision and reduce workplace injuries by taking over dangerous or physically demanding tasks.

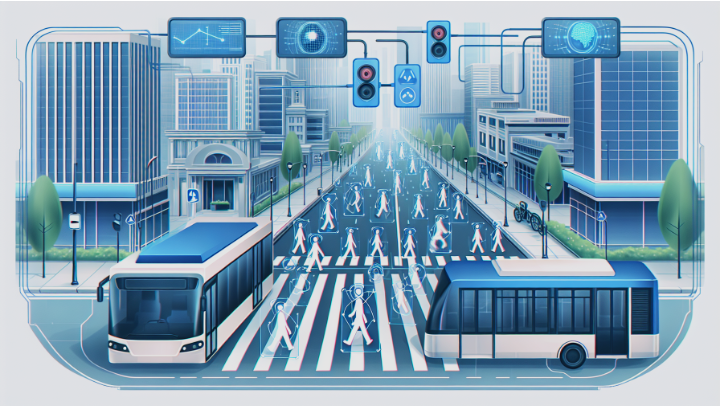

AI automation has also reshaped services that people rely on daily. In healthcare, automated systems help manage patient records, predict appointment demand, and flag potential health risks for doctors to review. In transport and logistics, AI optimizes routes, predicts delays, and coordinates deliveries more efficiently. These applications save time and resources while improving reliability for users.

However, alongside these gains come serious challenges. One of the most debated concerns surrounding AI automation is its impact on employment. As more tasks become automated, certain jobs, particularly routine or clerical roles, are shrinking or disappearing. While AI also creates new roles, these often require advanced skills that not all workers have access to. Without retraining opportunities and social support, automation risks widening economic inequality rather than improving overall prosperity.

Another issue lies in automated decision-making. AI systems increasingly influence who gets a loan, a job interview, insurance coverage, or online visibility. When such decisions are automated, they can feel distant and difficult to question. If an AI system rejects an application or flags a person as high risk, the reasoning is often unclear. This lack of transparency can undermine trust, especially when outcomes have real consequences for people’s lives.

Bias is a further concern. AI systems learn from data, and if that data reflects historical inequalities or flawed assumptions, automation can reinforce those patterns at scale. What appears efficient and objective may quietly reproduce discrimination, simply faster and more consistently. Because automated systems are often treated as neutral tools, their decisions may go unchallenged, making errors harder to detect and correct.

AI automation also concentrates power. Large organizations with access to data, computing resources, and technical expertise gain significant advantages over smaller competitors. This can lead to market dominance, reduced competition, and fewer choices for consumers and workers alike. When decision-making becomes centralized within automated systems, individual agency can be diminished.

Accountability presents another challenge. When something goes wrong in an automated system, responsibility is often unclear. Is the fault with the developer who designed the algorithm, the organization that deployed it, or the system itself? Current legal and ethical frameworks struggle to address these questions, especially when automated decisions affect large numbers of people at once.

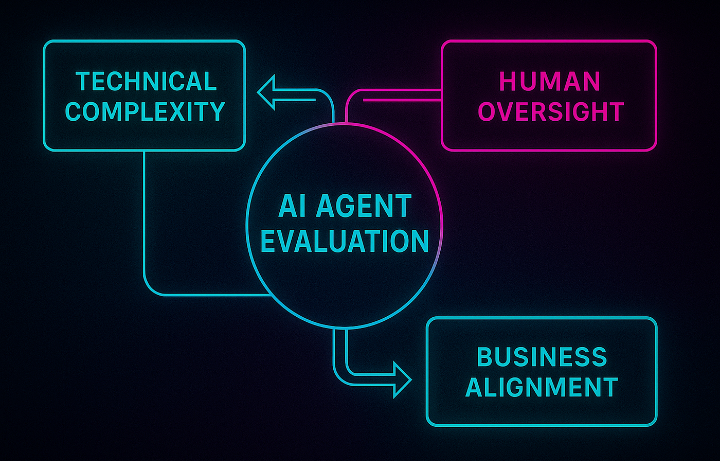

Despite these risks, AI automation is not inherently harmful. Its value depends on how it is designed, deployed, and governed. When used responsibly, automation can reduce human error, remove people from dangerous work, and improve access to essential services. The goal is not to eliminate human involvement, but to redefine it. Humans must remain responsible for setting goals, reviewing outcomes, and making final judgments where values and ethics are involved.

The challenge, then, is balance. AI automation should support human decision-making, not replace it entirely. Transparency, oversight, and the ability to challenge automated outcomes are essential. Education and retraining programs must keep pace with technological change to ensure workers are not left behind.

AI automation is shaping the future quietly, not through dramatic upheaval, but through small, cumulative changes that alter how systems operate and how decisions are made. Whether this future leads to greater opportunity or deeper inequality will depend less on the technology itself and more on the choices society makes about how far automation is allowed to go, and who remains accountable when machines take action.

In the end, AI may automate processes, but responsibility cannot be automated. That remains, and must remain, human.
