We Should Never Target AI as the Source of Lies

In today’s digital climate, artificial intelligence is often blamed for misinformation, deception, and the erosion of trust. When synthetic videos circulate online or convincingly written but false articles appear, the instinctive reaction is to point at AI and declare it the culprit. Yet this framing oversimplifies a far more complex issue. We should never target AI itself as the source of lies. The true origin of deception remains human intent.

Lying requires awareness and purpose. It is a conscious act in which someone knowingly presents false information to mislead others. Artificial intelligence does not possess consciousness, beliefs, or moral agency. It does not know truth from falsehood. It does not intend to deceive. It generates outputs based on patterns in data and the instructions it receives. When AI produces incorrect or misleading content, it is not lying in a moral sense. It is reflecting statistical probabilities, its own limitations, or flaws in the data it has been trained on.

The confusion arises because AI can communicate with remarkable fluency. Its responses can sound confident, structured, and authoritative. When errors occur, they appear deliberate because the delivery is polished. But fluency is not intent. Confidence is not awareness. AI systems do not decide to mislead; they calculate likely outputs based on input patterns.

The more pressing concern is how humans use these systems. AI can be deployed to generate fabricated news, impersonate voices, manipulate images, or automate disinformation campaigns. In these cases, the deception is not the machine’s initiative. It is a human strategy amplified by technology. Targeting AI as the liar risks diverting responsibility away from those who design, misuse, or weaponize these tools.

Blaming AI also obscures the broader structural challenges within the information ecosystem. Misinformation existed long before artificial intelligence became widespread. False narratives, propaganda, and manipulation have been persistent features of human communication. AI increases the speed and scale at which such content can spread, but it does not invent the impulse to deceive. The impulse is human.

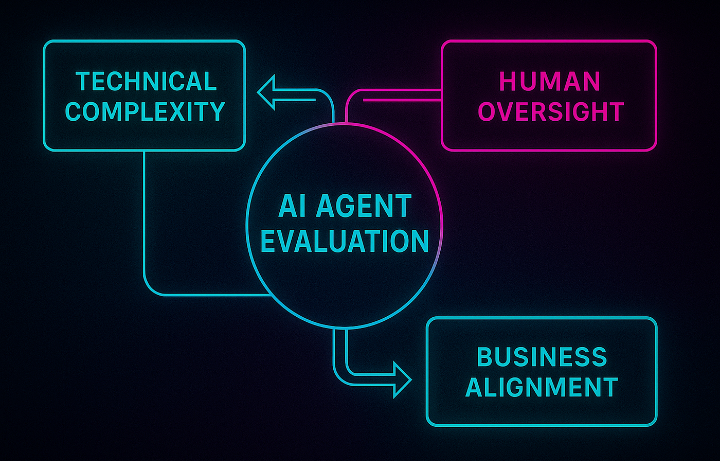

This distinction is critical for accountability. If AI is framed as inherently deceptive, society may overlook the governance, regulation, and ethical standards required to manage its use. Effective oversight focuses on transparency, responsible deployment, and consequences for misuse. It does not treat the technology as morally culpable.

That does not mean AI is without risk. Its ability to generate realistic text, audio, and video complicates verification processes and challenges traditional markers of authenticity. But these challenges call for improved digital literacy, stronger authentication systems, and clearer disclosure standards. They do not justify attributing moral wrongdoing to a tool.

We must also acknowledge that AI can strengthen truth-seeking efforts. The same technology capable of generating content can assist in detecting manipulated media, identifying misinformation patterns, and supporting fact-checking initiatives. AI can analyze vast datasets faster than humans, helping uncover inconsistencies or fabricated materials. Its impact depends on how it is directed.

Framing AI as a liar distorts public understanding and fosters unnecessary fear. It encourages the belief that technology itself has intentions. In reality, AI systems operate within boundaries defined by human design and governance. Responsibility lies with creators, institutions, regulators, and users who shape those boundaries.

As AI continues to integrate into journalism, education, governance, and communication, the conversation must remain precise. We should critique misuse, demand transparency, and strengthen ethical standards. But we should never misattribute agency where none exists.

The preservation of truth in the information age does not depend on condemning AI. It depends on human accountability. Artificial intelligence reflects the values and choices embedded within it. If those values prioritize integrity, transparency, and fairness, AI can support trust rather than undermine it.

The technology itself is not the adversary. Deception has always been a human act. AI is a powerful instrument, and like any instrument, its impact is determined by the hands that wield it.
